Large language models (LLMs) are highly valuable tools for researching security issues. While their utility is often attributed to their training on vast code repositories, their real strength lies in their ability to assist with the complex task of identifying subtle vulnerabilities, like finding a needle in a haystack.

This capability recently made headlines when security researcher Sean Heelan used nothing more than OpenAI's o3 model and its API (no custom tooling, no agentic frameworks) to discover CVE-2025-37899, a previously unknown zero-day vulnerability buried deep within the Linux kernel's SMB implementation. The model analyzed over 12,000 lines of code and identified a critical use-after-free vulnerability whose detection requires reasoning about concurrent connections and how their threads interact.

Zero-day vulnerabilities are urgent because the vendor has not yet had an opportunity to assess the issue and respond with a fix or mitigation. As a security team, you have to verify your defence-in-depth implementation while you wait for the patch.

Heelan had been researching a vulnerability and used it to test OpenAI o3's ability to identify use-after-free (UAF) issues. The experiment met with some success: o3 not only found the issue being analyzed but also found another one.

In this particular evaluation, o3 performed 2-3x better than Sonnet 3.7, marking a significant leap in AI-assisted vulnerability detection. The unexpected win of this research was not the quantitative improvement but the qualitative insight of o3. Having an AI assistant that can spot critical security issues lurking outside your research focus opens entirely new possibilities for proactive security analysis.

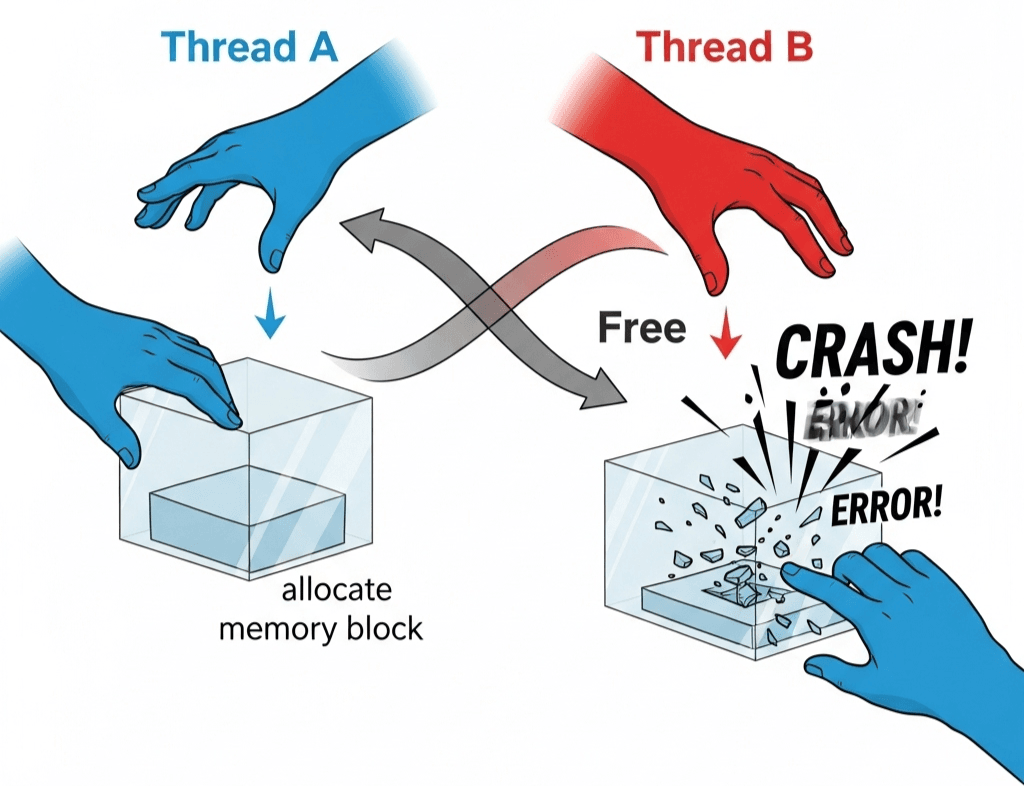

The vulnerability stems from a race condition in the ksmbd module (the kernel SMB implementation). When handling the SMB2 LOGOFF command, one thread may free an object while another thread, possibly serving a different connection, continues to access it. This lack of proper synchronization can lead to memory corruption, which attackers could potentially exploit.
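The pattern described above can be illustrated with a small Python analogy. This is only a sketch and not the ksmbd code: the `Session` class, its `user` field, and the handler names are hypothetical, and setting a field to `None` stands in for freeing kernel memory. The `after_check` callback forces the problematic interleaving deterministically, so no real threads are needed to reproduce it.

```python
import threading


class Session:
    """Hypothetical stand-in for a session object shared by several connections."""

    def __init__(self):
        self.user = "alice"           # models the object that LOGOFF frees
        self.lock = threading.Lock()  # used only by the synchronized variants


def logoff_unsafe(sess):
    # Models a LOGOFF handler that releases the object without synchronization.
    sess.user = None


def handle_request_unsafe(sess, after_check):
    # Check-then-use with a window in between: another handler can run here.
    if sess.user is not None:
        after_check()              # simulated interleaving point
        return sess.user.upper()   # fails if the object was released meanwhile
    return None


def logoff_safe(sess):
    # Release the object only while holding the lock.
    with sess.lock:
        sess.user = None


def handle_request_safe(sess, after_check):
    # Take a private reference under the lock, then work on that reference only.
    with sess.lock:
        user = sess.user
    if user is None:
        return None
    after_check()                  # a concurrent logoff no longer affects `user`
    return user.upper()
```

In the unsafe variant, calling `handle_request_unsafe(s, lambda: logoff_unsafe(s))` raises `AttributeError`, the Python counterpart of touching freed memory. The safe variant holds its own reference, which is loosely analogous to taking a reference count before use; in the kernel the real fix also has to control the lifetime of the freed memory, which this analogy does not model.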

Why traditional tools miss bugs like this is complicated. Most code analyzers need a running target to analyze complex issues like use-after-free, and it is not always feasible to set up an ideal runtime environment for kernels, firmware, IoT devices, and similar targets. Even though the problem is not complex, these issues go undetected in traditional software vulnerability assessments.

Not all use-after-free issues are the same, and there is no guarantee that SAST/DAST tools will find all of them. Tools like AddressSanitizer can be a good option for identifying them, but simulating real-world memory usage is non-trivial.

o3 detected the vulnerability in 8 out of 100 runs, while 28 of the 100 runs produced false positives. A researcher might well take those odds over having nothing at all, but these are not good numbers for somebody who needs to keep a vulnerability detection pipeline predictable.
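To put those figures in perspective, here is a small back-of-the-envelope sketch. The function names are hypothetical, and the calculation rests on two assumptions that the source does not state: that the detecting runs and the false-positive runs do not overlap, and that repeated runs are independent of each other.

```python
def run_stats(total_runs, true_positive_runs, false_positive_runs):
    """Return (detection_rate, precision) for a batch of model runs.

    Assumes true-positive and false-positive runs are disjoint sets.
    """
    detection_rate = true_positive_runs / total_runs
    reporting_runs = true_positive_runs + false_positive_runs
    # Precision: of the runs that reported a bug, how many reported the real one.
    precision = true_positive_runs / reporting_runs if reporting_runs else 0.0
    return detection_rate, precision


def p_at_least_one_hit(detection_rate, n_runs):
    """Probability that at least one of n independent runs finds the bug."""
    return 1 - (1 - detection_rate) ** n_runs


if __name__ == "__main__":
    rate, precision = run_stats(100, 8, 28)
    print(f"detection rate per run: {rate:.0%}")
    print(f"precision of reporting runs: {precision:.0%}")
    for n in (10, 50, 100):
        print(f"P(at least one hit in {n} runs): {p_at_least_one_hit(rate, n):.1%}")
```

Under these assumptions, only about 22% of the runs that report a bug point at the real one (8 of 36), so each report still needs human triage. Repeating runs raises the chance of catching the bug at least once, but it also multiplies the number of false reports to sift through.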

While LLMs have great potential as assistants in many areas of computer security, the right models, sound evaluations, and the correct context are necessary to achieve usable guarantees when applying them. The test by Sean Heelan shows the potential of LLMs, but also highlights drawbacks that need to be overcome.

We at Simbian continuously evaluate models against a range of cybersecurity use cases to decide which are best suited to each. Simbian’s VRM and CTEM agents are designed to work with a curated organizational context (context lake) combined with our proprietary AI models.

Simbian’s AI Agents can not only help identify potential vulnerabilities but also help classify/prioritize already detected vulnerabilities for engineering teams. These agents can also provide mitigations and test vectors even before the vendor can roll out the patch.

Working together, AI and human researchers, developers, and security professionals can identify and remediate complex vulnerabilities more efficiently. Stay tuned for a deep dive into how LLMs are shaping the future of cybersecurity.

Meanwhile, AI Vulnerability Analysts aren’t a distant fantasy. Simbian automates vulnerability assessment and prioritization, slashes mitigation times, and turns security personnel into cyber superheroes. The question isn’t if AI will transform your security teams; it’s how soon you’ll harness its power.